We've just created the world's first NVIDIA DGX-1 HPC cluster for deep learning.

Image: Flickr/Bill Ward

One of the things we do best here is to make sense of data.

We analyse it, interpret it, and model it to create knowledge that helps make better decisions for the prosperity, quality of life and future sustainability of Australia and humanity.

All this data crunching requires really powerful computers to make finding answers to our big questions possible within a realistic time frame (unlike Deep Thought, we don’t have seven and a half million years to answer our big questions!).

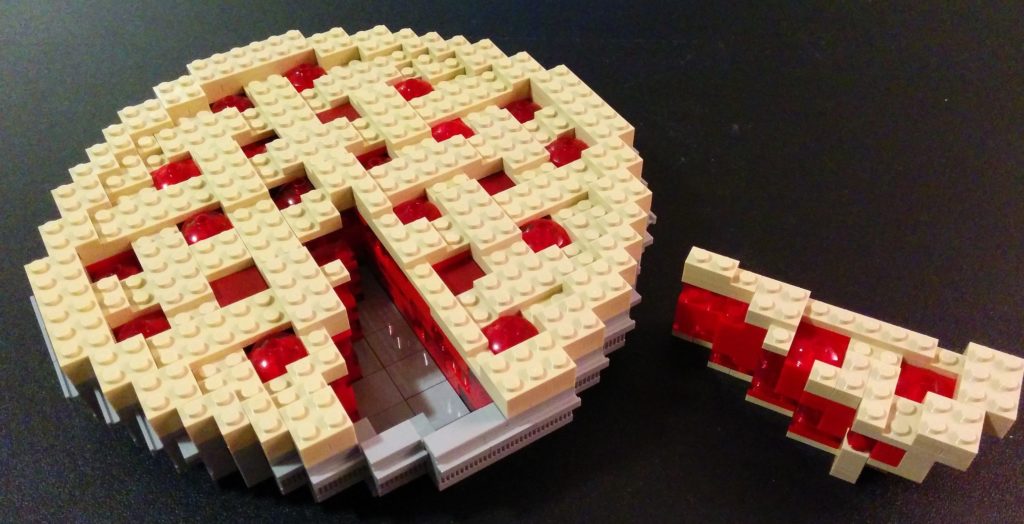

We’ve baked our high-performance computing (HPC) pie from a range of ingredients to cater to a wide variety of appetites.

Our latest serve of HPC pie

One emerging area of computational processing we’re using is deep learning, a form of artificial intelligence.

To support this work, we’ve just installed the first two NVIDIA DGX-1 computers in the Asia Pacific region (below), and turned them into the world’s first DGX-1 high-performance cluster.

Sitting in our Canberra data centre alongside their larger siblings Bragg, Pearcey and Ruby, these new GPU-based computers use their massive parallel computing power to enable our scientists to run complex algorithms and processing pipelines on big sets of data.

So what exactly is deep learning?

Deep learning is the latest evolution of machine learning, a branch of artificial intelligence, and will help make things like driverless cars a reality.

Making driverless cars a reality – These images from Data61 researcher Jose Alverez’s team show their work towards driverless cars. The figure above shows a scene around a car where the system has assigned two labels to the scene.

It gives computers the ability to see, learn and react quickly to complex situations through applications such as computer vision, speech recognition, and natural language processing.

At its most basic, deep learning uses algorithms to process data, to learn from it, and to predict an outcome. To be able to do this quickly and accurately, the machine must be ‘trained’ with large amounts of data until it’s consistently reaching the desired outcomes on its own, which takes lots of computational power.
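To make the idea of ‘training’ a little more concrete, here is a minimal sketch in Python. It is a toy illustration only, not the software running on our DGX-1s: it trains a single artificial neuron (the building block that deep networks stack into many layers) on four made-up examples of the logical OR rule. The function names, the learning rate and the data are all assumptions chosen for the example.

```python
import math

# Toy training data (made up for illustration): the logical OR rule.
# Each example is (inputs, desired outcome).
EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Return the neuron's confidence (between 0 and 1) that the answer is 1."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid squashes z into (0, 1)


def classify(weights, bias, inputs):
    """Turn the confidence into a hard 0/1 prediction."""
    return 1 if predict(weights, bias, inputs) > 0.5 else 0


def train(examples, learning_rate=0.5, max_epochs=10_000):
    """Repeatedly show the examples to the neuron, nudging its weights
    after each one, until every example is predicted correctly."""
    weights, bias = [0.0, 0.0], 0.0
    for epoch in range(1, max_epochs + 1):
        for inputs, target in examples:
            # How far off was the prediction? (gradient of the log loss)
            error = predict(weights, bias, inputs) - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
        # Stop once the desired outcome is reached on every example.
        if all(classify(weights, bias, x) == y for x, y in examples):
            return weights, bias, epoch
    raise RuntimeError("training did not reach the desired outcomes")


weights, bias, epochs = train(EXAMPLES)
print(f"trained in {epochs} passes over the data")
print([classify(weights, bias, x) for x, _ in EXAMPLES])
```

Real deep learning follows the same learn-from-mistakes loop, but with millions of adjustable weights and far larger data sets, which is where the parallel computing power of GPUs comes in.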

What will we be using it for?

Much of our researchers’ work involves wading through a deluge of data to identify what’s important among the background ‘noise’, and deep learning allows them to tackle these big data challenges rapidly and in new ways.

In a scene similar to the one above, the system has used seven labels and assigned one of the labels to every pixel in the image.

Our scientists are interested in adopting deep learning to drive their research into new and exciting directions, and we expect that in time, people from all areas will be using deep learning in their research.

We already have a bunch of projects using our new DGX-1s. Our Health & Biosecurity team is uncovering the epigenomic effect on disease proteins and developing enhanced MRI; our Data61 researchers are modelling nanoparticles; and our astronomers hope to search for pulsars, and for new objects and events, in the huge data sets they’ve collected with the Parkes radio telescope.

We also have our fingers in some other pies!

As well as running our own HPC and data infrastructure, we help fund the operations of Australia’s two peak research computing facilities: the National Computational Infrastructure (NCI) at the Australian National University in Canberra, and the Pawsey Supercomputing Centre at our Kensington Campus in WA. We also partner with other specialised facilities such as QCIF in Queensland, and MASSIVE at the Australian Synchrotron and Monash University.

Would you like a slice of pie?

Our supercomputing facilities are available to both international and Australian users from industry and research.