Barracking for the wrong team: Obama’s words and face manipulated by writer and comedian Jordan Peele.

Can you remember that helpless feeling when someone misinterpreted something you said and quoted it out of context? Having words put in your mouth can be not only humiliating but profoundly destructive. Now imagine that, instead of taking your words out of context, someone scanned every photo and video you’ve ever uploaded to Facebook and built a programmable 3D model of your face, making it look like you said something you never actually uttered.

This is exactly what happened to Barack Obama, former US President, last week. And it’s not an anomaly. These videos, known as deepfakes, first emerged on Reddit and quickly spread elsewhere, gathering technological advances as they went and evolving from obvious knock-offs into refined, expertly crafted renderings.

Jordan Peele, an American comedian and director, is behind this deepfake video of Obama, which he made using Adobe After Effects and the AI face-swapping tool FakeApp. FakeApp was also behind the fake video that plastered Gal Gadot’s face onto a performer in a pornographic film.

The message behind this video is clear. Peele (as Obama) says: ‘This is a dangerous time. Moving forward we need to be more vigilant with what we trust on the internet.’

In fact, the video might just be a harbinger of a troubling, perhaps disastrous, future for the technology. But before we talk of falling skies, let’s talk about how it works.

The term ‘deepfake’ combines ‘deep learning’ and ‘fake’. The ‘deep learning’ component refers to the technology used to create these clips: a form of machine learning that goes beyond simple, task-by-task processing. Instead, layered networks take in large amounts of data and learn meaningful patterns from it, loosely similar to what goes on in our own heads.

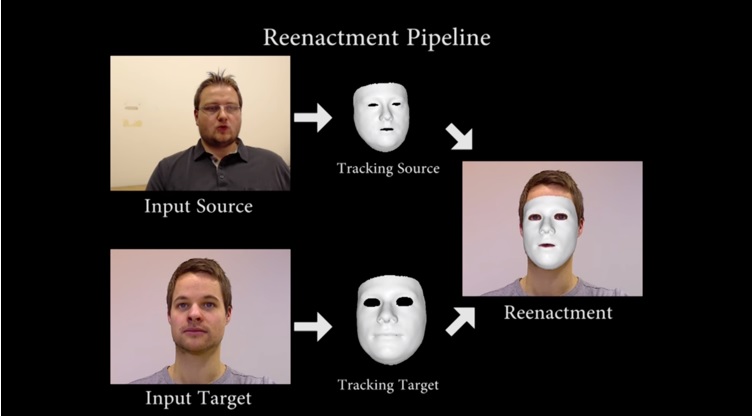

So how does it work? Find a public figure (the more public, the better), gather plenty of video and audio footage of them, and feed it into a computer. With enough footage and enough data points, the computer can ‘learn’ the face, its expressions, and the plausible position of each data point at any given moment.

Deepfake software.

You might hope that involuntary ex-presidential addresses and other nefarious applications of deep learning were confined to dystopian sci-fi, or at least to powerful supercomputers, but the hard truth is that the software is freely available and runs on any decent computer.

As we mentioned, the software can be applied to any public figure, and it is not limited to pre-recorded footage. In the short time since it was developed, it has progressed in leaps and bounds, producing clips that are nearly indistinguishable from legitimate ones. Indeed, researchers have recently developed software that maps facial movements and words onto live footage, which could be used to tamper with, say, an election-night broadcast or a national public service announcement.

When the public leaves its scrutiny at the door and relies on the media to say what’s true and what’s not, it exposes itself to every weakness of that outlet. In the past, misinformation was spread by traditional media, such as TV and newspapers, through poor or biased reporting. Today, though, all of us spread deception over social media, through fake news and manipulated imagery, and now, with deepfakes, through doctored videos that turn public figures into puppets for any agenda, anywhere.

So what can we do? Get prepared. That’s what computer science researchers like Richard Nock, head of the Machine Learning Group at Data61, have suggested. Richard spoke recently about some of the potential measures and solutions for deepfake technology.

‘There are several solutions,’ said Richard. ‘One is a classic solution, called fingerprinting or watermarking, where you put some signals in the videos which help you figure out whether it’s an original or a fake, and [further], the same technology that can actually be used to create these fakes can also be used to detect these fakes.’
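To make the watermarking idea a little more concrete, here is a minimal Python sketch of one simple kind of scheme, a *fragile* watermark. It is an illustration only, not Data61’s method. It assumes a video frame is raw 8-bit grayscale pixel data and that the publisher holds a secret key. The function names `embed` and `verify` and the example key are hypothetical. The publisher hides a keyed hash (HMAC-SHA256) of the frame in the lowest bit of its first 256 pixels. If anyone later alters the frame, the recomputed hash no longer matches the hidden one.

```python
import hashlib
import hmac

MARK_BITS = 256  # an HMAC-SHA256 digest is 256 bits; one bit per carrier pixel


def _digest(frame: bytes, key: bytes) -> bytes:
    """Keyed hash of the frame, with the carrier pixels' lowest bits zeroed
    so the hidden mark does not influence the hash it encodes."""
    cleared = bytearray(frame)
    for i in range(MARK_BITS):
        cleared[i] &= 0xFE
    return hmac.new(key, bytes(cleared), hashlib.sha256).digest()


def embed(frame: bytes, key: bytes) -> bytes:
    """Hypothetical publisher step: hide the frame's HMAC in the least
    significant bit of the first 256 pixels."""
    if len(frame) < MARK_BITS:
        raise ValueError("frame too small to carry a 256-bit mark")
    digest = _digest(frame, key)
    marked = bytearray(frame)
    for i in range(MARK_BITS):
        bit = (digest[i // 8] >> (7 - i % 8)) & 1
        marked[i] = (marked[i] & 0xFE) | bit
    return bytes(marked)


def verify(frame: bytes, key: bytes) -> bool:
    """Hypothetical viewer step: read the hidden bits back and compare them
    with a freshly computed HMAC of the frame."""
    if len(frame) < MARK_BITS:
        return False
    extracted = bytearray(MARK_BITS // 8)
    for i in range(MARK_BITS):
        extracted[i // 8] |= (frame[i] & 1) << (7 - i % 8)
    return hmac.compare_digest(bytes(extracted), _digest(frame, key))


if __name__ == "__main__":
    frame = bytes(range(256)) * 4  # hypothetical 1,024-pixel grayscale frame
    key = b"broadcaster-secret"    # hypothetical signing key
    marked = embed(frame, key)
    print(verify(marked, key))     # True: the frame is untouched
    doctored = bytearray(marked)
    doctored[700] ^= 0x10          # alter a single pixel
    print(verify(bytes(doctored), key))  # False: tampering detected
```

Because this mark breaks under any change, including ordinary lossy compression, it only shows the basic ‘signal in the video’ idea. Watermarks that survive re-encoding while still exposing manipulation are considerably more involved.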

Machine learning is a powerful technology, and one that’s becoming more sophisticated over time. Deepfakes aside, machine learning is also bringing enormous benefits to areas like privacy, healthcare and transport, including self-driving cars.

At Data61, we act as a network, partnering with government, industry and universities to advance AI technologies across many areas of society and industry. Central to the wider deployment of these advanced technologies is trust, and much of our work is motivated by maximising their trustworthiness.

Fake it off



10th May 2018 at 2:36 am

This technology was developed by CSIRO and published in 2013 as the “CSIRO Face Analysis software development kit”:

https://research.csiro.au/software/face-analysis/

8th May 2018 at 1:36 pm

Don’t believe anything you hear from a politician or a journalist. Simple

3rd May 2018 at 1:16 pm

It would seem that the only way to be sure of what a person said is to be there in person. That takes us right back to the old-fashioned way of communicating!

3rd May 2018 at 9:31 am

We were always told that the camera does not lie. – No more! How long will it be before they can fake DNA evidence, or are they already doing it?